Chapter 1: Foundations

The history of human civilisation is a tale of cumulative effort. Each generation builds upon the work of their forebears. Sometimes the work takes a backward step. Sometimes we wander down dead ends. But we struggle on. Bit by bit our species makes progress. Whether the progress is incremental or a huge leap forward, it is always borne upon the accomplishments of those who came before us.

Nowhere is this layered nature of progress more apparent than in the history of technology. Even the most dramatic bounds in technological advancement are only possible when there is some groundwork to build upon.

Gutenberg’s printing press would not have been invented if it weren’t for the work already done in creating the screw press used for winemaking. Technologies aren’t created in isolation. They are imprinted with the ghosts of their past.

The layout of the QWERTY keyboard for your computer—and its software equivalent on your phone—is an echo of the design of the first generation of typewriters. That arrangement of keys was chosen to reduce the chances of mechanical pieces of metal clashing as they sprang forward to leave their mark on the paper.

The hands on a clock face move in a clockwise direction only because that’s the direction that the shadow cast by a sundial moves over the course of a day in the northern hemisphere. Had history turned out differently, with the civilisation of the southern hemisphere in the ascendant, then the hands on our clocks would today move in the opposite direction. As for why those clocks carve out the time in periods of 24 hours, each with 60 minutes, with each minute having 60 seconds, that’s thanks to an ancient Sumerian civilisation. They hit upon using the number 60 as their base for counting and commerce. It’s the lowest number that can be evenly divided by each of the first six whole numbers. That sexagesimal way of counting is still with us today in the hours, minutes, and seconds that we use as conceptual models for subdividing one rotation of our planet.
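The claim about the number 60 is easy to check for yourself. Here is a minimal sketch in Python; the function name `lowest_common_multiple` is made up for this illustration, and the code only confirms the arithmetic rather than anything about Sumerian practice.

```python
from functools import reduce
from math import gcd


def lowest_common_multiple(numbers):
    """Return the smallest positive integer divisible by every number given."""
    # Fold the numbers together pairwise: lcm(a, b) = a * b // gcd(a, b)
    return reduce(lambda a, b: a * b // gcd(a, b), numbers, 1)


# The first six whole numbers: 1, 2, 3, 4, 5, 6
smallest = lowest_common_multiple(range(1, 7))
print(smallest)  # 60

# The same base gives us the number of seconds in a day
seconds_per_day = 24 * 60 * 60
print(seconds_per_day)  # 86400
```

Any smaller number fails at least one of the six divisions, which is what makes 60 such a convenient base for splitting things into halves, thirds, quarters, fifths, and sixths.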

These echoes of the past reverberate in the present even when they have outlived their usefulness. You’ll still sometimes see a user interface that displays an icon of a Compact Disc or vinyl record to represent music. That same interface might use the image of a 3½ inch floppy disk to represent the concept of saving data. Floppy disks wound up being 3½ inches in size because the disk was designed to fit into a shirt pocket. The icons in our software interfaces are whispering stories to us from the history of clothing and fashion.

Let’s share what we know

Scientific progress would be impossible without a shared history of learning to build upon. As Sir Isaac Newton put it, if we have seen further it is by standing on the shoulders of giants.

When knowledge is passed from one generation to the next, theories become more refined, units of measurement become standardised, and experiments increase in precision.

Right now humanity’s most precise experiments are being conducted beneath the border between Switzerland and France. This is the home of CERN, the European Organisation for Nuclear Research. In the ring of its Large Hadron Collider, roughly 27 kilometres (about 17 miles) in circumference, protons are being smashed together at velocities approaching the speed of light. Our primate species is recreating the conditions from the start of our universe. The LHC is the most complex piece of machinery that has ever been built.

The awe‐inspiring engineering of the LHC is matched by the unprecedented levels of international co‐operation behind CERN. The particle accelerator became operational in the first decade of the 21st century but the groundwork was laid more than half a century before. That was when a group of nations came together to create the CERN Convention, dedicating resources and money towards pure scientific research. The only return on investment they expected was in the currency of knowledge.

This groundwork created a unique environment free from the constraints of national, economic, and social hierarchies. Nobel prize‐winning physicists collaborate with students on summer internships. If there is an element of social categorisation at CERN, it is only between theorists and experimentalists. The theorists are the ones with blackboards in their offices. The experimentalists are the ones with computers. They have to deal with a lot of data. Even before the Large Hadron Collider was switched on, managing information was a real challenge at CERN.

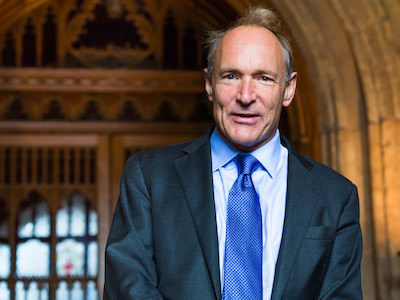

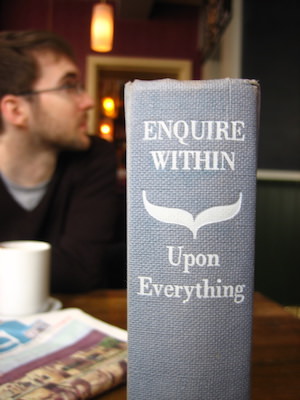

Enter Tim Berners‐Lee, a computer scientist from England who found himself working at CERN in the 1980s. At the beginning of that decade, he started a personal project to get to grips with managing information. The resulting software was called ENQUIRE, named for a Victorian manual of domestic life called Enquire Within Upon Everything.

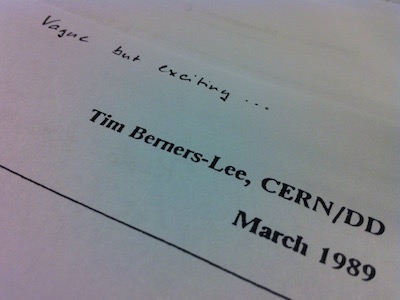

By the end of the ’80s, Tim Berners‐Lee was ready to tackle the thorny problem of information management on a larger scale. In order to get buy‐in at CERN, he produced an unassuming document with the title Information Management: A Proposal. Fortunately his supervisor, Mike Sendall, recognised the potential of the idea and gave the go‐ahead by scrawling the words “vague but exciting” across the top of the paper. That proposal would become the World Wide Web.

Net value

Today we think of the World Wide Web as one of the greatest inventions in the history of communication, but to the scientists at CERN it is merely a byproduct. When you’re dealing in cosmological timescales and investigating the very building blocks of reality itself, the timeline of humanity’s relationship with technology is little more than a rounding error.

When Tim Berners‐Lee decided to take on the problem of information management at CERN, the internet was already established as part of the infrastructure there. This network of networks was first created in the 1960s and the early adopters were universities and scientific institutions.

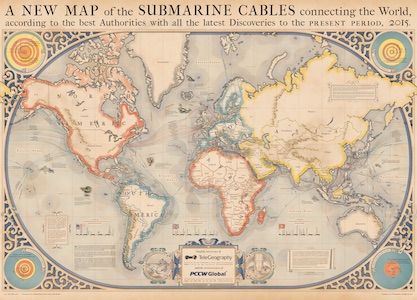

These nodes were already physically connected via telephone wires. Rather than build an entirely new network from scratch, it made sense to reuse what was already there. Once again, a new technology was only made possible by the existence of an older one. In the nineteenth century the world was technologically terraformed for the telegraph. Through astonishing feats of engineering, our planet was wired up with undersea cables. Those same cables would later be repurposed to carry telephone signals. Later again, they would carry the digital ones and zeros of the internet. Today those signals are transmitted via pulses of light travelling through fibre‐optic cables. Those fibre‐optic cables follow the same paths across the ocean floor as their telegraphic antecedents.

The internet has no centre. This architectural decision gives the network its robustness. You may have heard that the internet was designed to resist a nuclear attack. That’s not entirely correct. It’s true that the project began with military considerations. The initial research was funded by DARPA, the Defense Advanced Research Projects Agency. But the engineers working on the project were not military personnel. Their ideals had more in common with the free‐speech movement than with the military‐industrial complex. They designed the network to route around damage, but the damage they were concerned with was censorship, not a nuclear attack.

The open architecture of the internet reflected the liberal worldview of its creators. As well as being decentralised, the internet was also deliberately designed to be a dumb network. That’s its secret sauce. The protocols underlying the transmission of data on the internet—TCP/IP—describe how packets of data should be moved around, but those protocols care not a whit for the contents of the packets. That allows the internet to be the transport mechanism for all sorts of applications: email, Telnet, FTP, and eventually the World Wide Web.
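The idea of a dumb network can be sketched as a toy model. This is not TCP/IP itself, only an illustration of the principle: the names `Packet`, `deliver`, and the two hosts below are all hypothetical. The delivery function looks at the destination and nothing else.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    destination: str  # the only field the toy network reads
    payload: bytes    # opaque bytes; the network never looks inside


def deliver(packet, hosts):
    """Hand the payload to the destination host without inspecting it."""
    handler = hosts[packet.destination]
    return handler(packet.payload)


# Hypothetical applications living at the edges of the network.
# Only they know what the bytes mean.
hosts = {
    "mail.example": lambda data: "email: " + data.decode("utf-8"),
    "web.example": lambda data: "web: " + data.decode("utf-8"),
}

print(deliver(Packet("mail.example", b"Hello"), hosts))
print(deliver(Packet("web.example", b"<P>Hello"), hosts))
```

Because `deliver` never interprets the payload, a new kind of application can be added at the edges without changing the network in the middle.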

Hyperspace

The web uses HTTP, the HyperText Transfer Protocol, to send and receive data. This data is uniquely identified with a URL. Many of these URLs identify pages made of HTML, the HyperText Markup Language. The killer feature of the web lies here in HTML’s humble A element. The A stands for Anchor. Its HREF attribute allows you to cast off from within one URL out to another URL, creating a link that can be traversed from one page to the other. These links turn the web from being a straightforward storage and retrieval system into a hypertext system.
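To make the A element a little more concrete, here is a small sketch that pulls the links out of a fragment of HTML using Python’s standard `html.parser` module. The class name `LinkCollector`, the sample markup, and the example.org and example.com addresses are all assumptions made for this illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the destination of every A element that has an HREF."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        # html.parser reports tag and attribute names in lowercase
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL
                    self.links.append(urljoin(self.base_url, value))


# A made-up page with one relative link and one absolute link
page = (
    '<P>Read the <A HREF="history.html">history</A> or visit '
    '<A HREF="http://example.com/other.html">another site</A>.</P>'
)

collector = LinkCollector("http://example.org/docs/index.html")
collector.feed(page)
collector.close()
print(collector.links)
# ['http://example.org/docs/history.html', 'http://example.com/other.html']
```

Each collected URL is a one-way pointer from this page out to another resource. Nothing in the markup tells the destination that it has been linked to.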

Tim Berners‐Lee did not invent hypertext. That term was coined by Ted Nelson, a visionary computer scientist who was working on his own hypertext system called Xanadu. Both Ted Nelson and Tim Berners‐Lee were influenced by the ideas set out by Vannevar Bush in his seminal 1945 essay, As We May Think. Bush, no doubt, was in turn influenced by the ideas of Belgian informatician Paul Otlet. Each one of these giants in the history of hypertext was standing on the shoulders of the giants that had come before them. Giants all the way down.

Compared to previous grand visions of hypertext, links on the web are almost laughably simplistic. There is no two‐way linking. If you link to another URL, but the page at that URL is moved or destroyed, you have no way of knowing.

But the simplicity of the web turned out to be the secret of its success.

Tim Berners‐Lee assumed that most URLs would point to non‐HTML resources: word‐processing documents, spreadsheets, and all sorts of other computer files. HTML could then be used to create simple index pages that point to these files using links. Because HTML didn’t need to do very much, it had a limited vocabulary. That made it relatively easy to learn. To Tim Berners‐Lee’s surprise, people began to create fully‐fledged documents in HTML. Instead of creating content in other file formats and using HTML to link them up, people began writing content directly in HTML.

Mark me up, mark me down

HTML wasn’t the first markup language to be used at CERN. Scientists there were already sharing documents written in a flavour of SGML—Standard Generalized Markup Language. Tim Berners‐Lee took this existing vocabulary from CERN SGML and used it as a starting point for his new markup language. Once again, it made sense to build on what people were already familiar with rather than creating something from scratch.

The first version of HTML contained a grand total of 21 elements. Many of those elements are still with us today: TITLE, P, UL, LI, H1, H2, etc., and of course, the A element. Others have fallen by the wayside: ISINDEX, PLAINTEXT, LISTING, HP1, HP2, etc., as well as a proprietary element called NEXTID that only made sense if you were using a computer running the NeXTSTEP operating system. That’s the OS that Tim Berners‐Lee was using when he created HTTP, HTML, and the world’s first web browser, confusingly called WorldWideWeb, which only worked on NeXT machines.

To demonstrate the power and interoperability of the web, a cross‐platform browser was needed: one that anybody could install and use, no matter what operating system they were using. The task of building that browser fell to an undergraduate at CERN named Nicola Pellow. She created the Line Mode Browser. It was simple but powerful. It didn’t have the same level of interactivity as the WorldWideWeb browser, but the fact that it could be run on any machine meant that the web was now accessible to everyone.

As soon as there were two web browsers in the world, interoperability and backwards compatibility became important issues. For instance, what should the Line Mode Browser do when it encounters an HTML element it doesn’t understand, such as NEXTID?

The answer can be found in the sparse documentation that Tim Berners‐Lee wrote for his initial collection of elements, a document called HTML Tags. Under the heading “Next ID” he wrote:

Browser software may ignore this tag.

This seemingly innocuous decision would have far‐reaching consequences for the future of the World Wide Web.
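The “may ignore this tag” rule can be sketched in a few lines of Python. This is not how the Line Mode Browser was written; it is a toy built on the standard `html.parser` module, and the `KNOWN_TAGS` set, the `TolerantRenderer` class, and the sample markup are all assumptions made for the sake of illustration.

```python
from html.parser import HTMLParser

# A hypothetical subset of the elements this toy browser understands
KNOWN_TAGS = {"p", "h1", "h2", "ul", "li", "a"}


class TolerantRenderer(HTMLParser):
    """Keep the text of a page and skip over any tag it doesn't recognise."""

    def __init__(self):
        super().__init__()
        self.text = []     # text content, in document order
        self.ignored = []  # unknown tags that were skipped

    def handle_starttag(self, tag, attrs):
        # Unknown tags are noted and otherwise ignored; no error is raised
        if tag not in KNOWN_TAGS:
            self.ignored.append(tag)

    def handle_data(self, data):
        # Text is kept no matter which tags surround it
        self.text.append(data)


renderer = TolerantRenderer()
renderer.feed("<H1>Hello</H1><NEXTID><P>Still readable.")
renderer.close()

print(renderer.ignored)         # ['nextid']
print(" ".join(renderer.text))  # Hello Still readable.
```

The unknown element costs the reader nothing: the rest of the page still comes through. A stricter parser that stopped at the first unrecognised tag would have left the reader with a broken page instead.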